Ada-VSR: Adaptive Video Super-Resolution with Meta-Learning

ACM Multimedia 2021 (Oral Presentation)

Akash Gupta

UC Riverside

Padmaja Jonnalagedda

UC Riverside

Bir Bhanu

UC Riverside

Amit K. Roy-Chowdhury

UC Riverside

Abstract

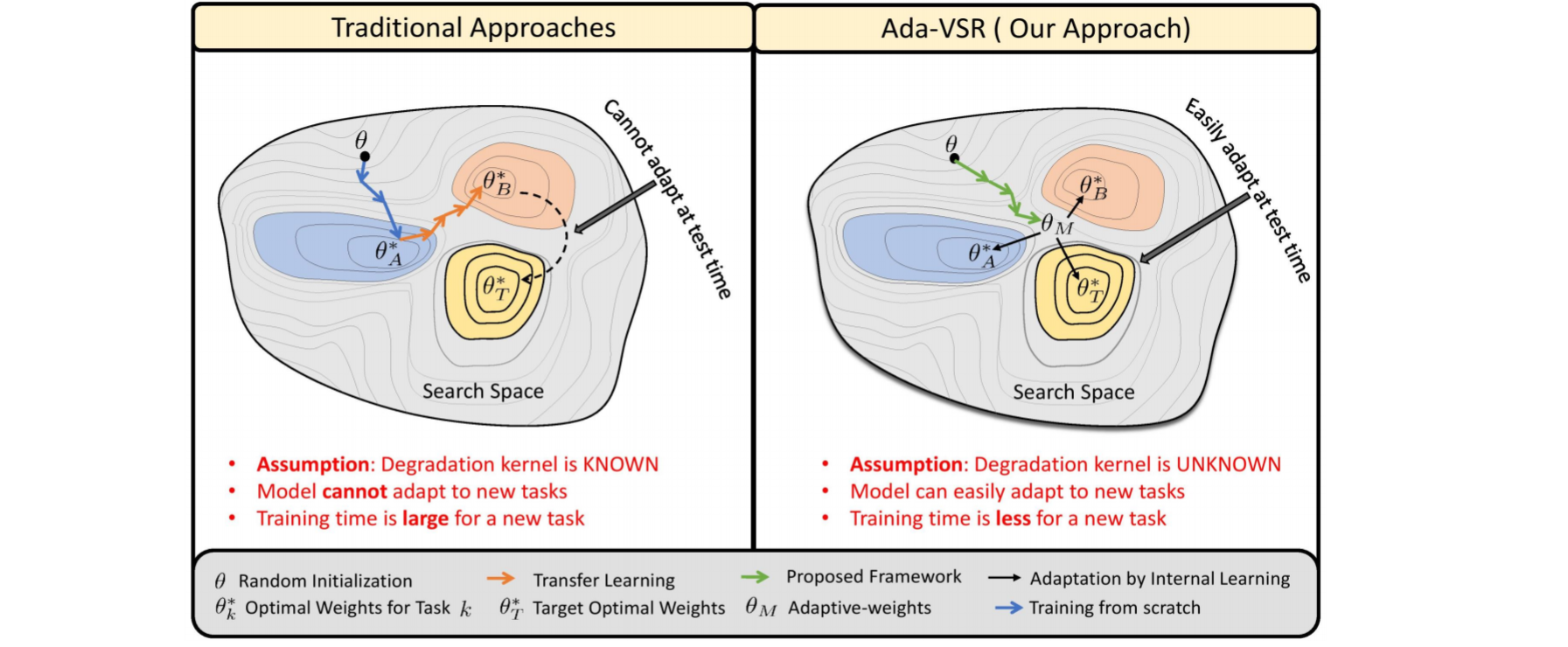

Most existing work in supervised spatio-temporal video super-resolution (STVSR) relies heavily on a large-scale external dataset of paired low-resolution, low-frame-rate (LR-LFR) and high-resolution, high-frame-rate (HR-HFR) videos. Despite their remarkable performance, these methods assume that the low-resolution video is obtained by down-scaling the high-resolution video with a known degradation kernel, which does not hold in practical settings. Another problem is that these methods cannot exploit the instance-specific internal information of a video at test time. Recently, deep internal learning approaches have gained attention for their ability to use the instance-specific statistics of a video. However, these methods have a long inference time because they require thousands of gradient updates to learn the intrinsic structure of the data. In this work, we present Adaptive Video Super-Resolution (Ada-VSR), which leverages external and internal information through meta-transfer learning and internal learning, respectively. Specifically, meta-learning is employed to obtain adaptive parameters from a large-scale external dataset; these parameters can adapt quickly to the novel condition (degradation model) of a given test video during the internal learning task, thereby exploiting both external and internal information of a video for super-resolution. A model trained with our approach can adapt to a specific video condition with only a few gradient updates, which significantly reduces the inference time. Extensive experiments on standard datasets demonstrate that our method performs favorably against various state-of-the-art approaches.
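The idea of learning parameters that adapt in only a few gradient updates can be illustrated with a toy sketch. This is not the Ada-VSR implementation: it assumes a scalar model `y = w * x`, uses a hypothetical "task" whose slope stands in for an unknown degradation, and swaps in a Reptile-style first-order meta-update for simplicity. All function names and hyperparameters here are illustrative assumptions.

```python
import random


def loss_and_grad(w, xs, ys):
    """Mean squared error of the toy model y = w * x, and its gradient in w."""
    n = len(xs)
    loss = sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / n
    grad = sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / n
    return loss, grad


def adapt(w, xs, ys, steps, lr):
    """Inner loop: a few gradient updates on a single task's own data."""
    for _ in range(steps):
        _, g = loss_and_grad(w, xs, ys)
        w -= lr * g
    return w


def sample_task(rng):
    """Hypothetical task: a random slope plays the role of an unknown degradation."""
    slope = rng.uniform(2.0, 4.0)
    xs = [rng.uniform(-1.0, 1.0) for _ in range(20)]
    ys = [slope * x for x in xs]
    return xs, ys


def meta_train(rng, w0=0.0, meta_steps=500, inner_steps=3, inner_lr=0.1, meta_lr=0.1):
    """Outer loop (Reptile-style): nudge the initialization toward adapted weights."""
    w = w0
    for _ in range(meta_steps):
        xs, ys = sample_task(rng)
        w_adapted = adapt(w, xs, ys, inner_steps, inner_lr)
        w += meta_lr * (w_adapted - w)
    return w


rng = random.Random(0)
w_meta = meta_train(rng)

# Unseen test task: compare three gradient steps from scratch
# against three steps from the meta-learned initialization.
xs, ys = sample_task(rng)
loss_scratch, _ = loss_and_grad(adapt(0.0, xs, ys, 3, 0.1), xs, ys)
loss_meta, _ = loss_and_grad(adapt(w_meta, xs, ys, 3, 0.1), xs, ys)
print(f"meta init: {w_meta:.3f}")
print(f"loss after 3 steps from scratch:   {loss_scratch:.4f}")
print(f"loss after 3 steps from meta init: {loss_meta:.4f}")
```

In this toy setting the meta-learned initialization settles inside the range of task slopes, so the same three-step budget leaves a smaller residual error than starting from zero. That is the effect the abstract attributes to combining external (meta-training) and internal (test-time) learning.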

Downloads

Coming Soon

BibTeX

@inproceedings{gupta2021ada,
  title={Ada-VSR: Adaptive Video Super-Resolution with Meta-Learning},
  author={Gupta, Akash and Jonnalagedda, Padmaja and Bhanu, Bir and Roy-Chowdhury, Amit K},
  booktitle={Proceedings of the 29th ACM International Conference on Multimedia},
  pages={327--336},
  year={2021}
}

Acknowledgements

The work was partially supported by NSF grants 1664172, 1724341 and 1911197.