ALANET: Adaptive Latent Attention Network for Joint Video Deblurring and Interpolation

Oral Presentation

ACM Multimedia 2020

Abstract

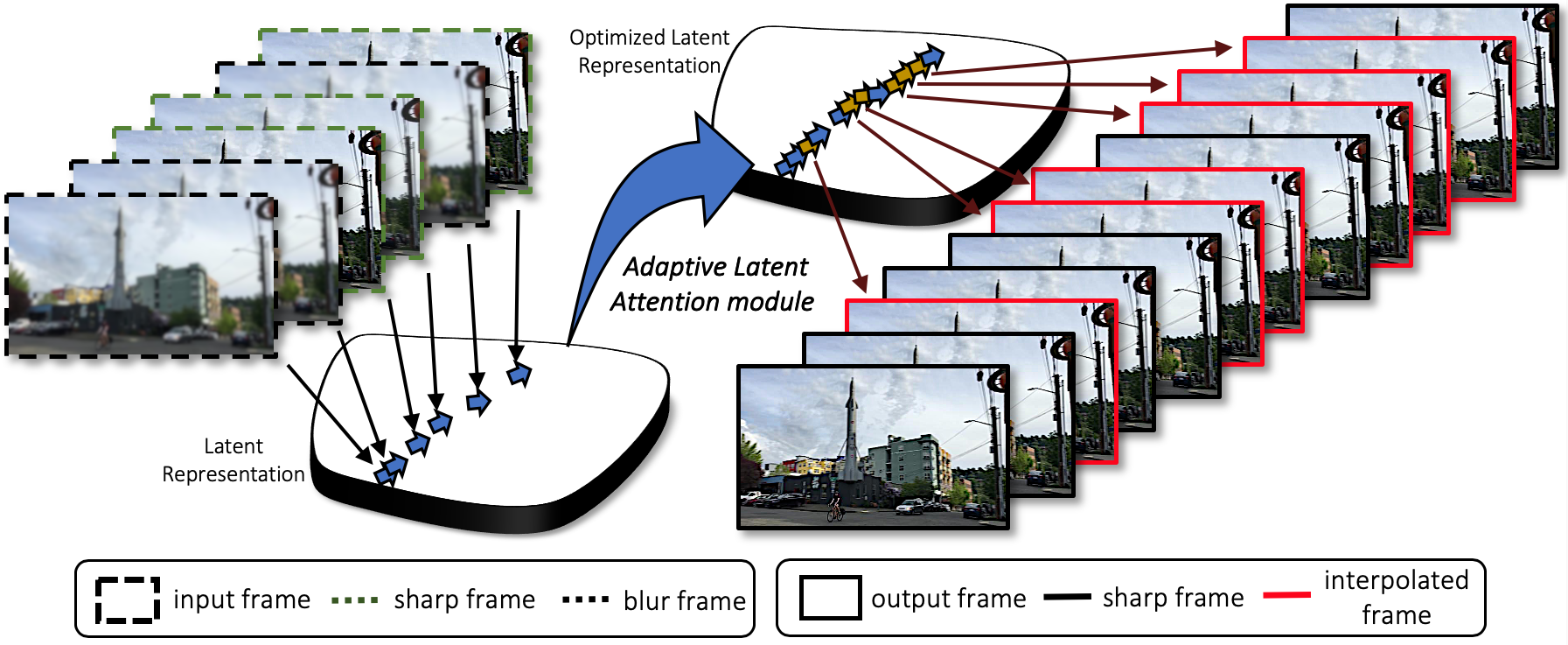

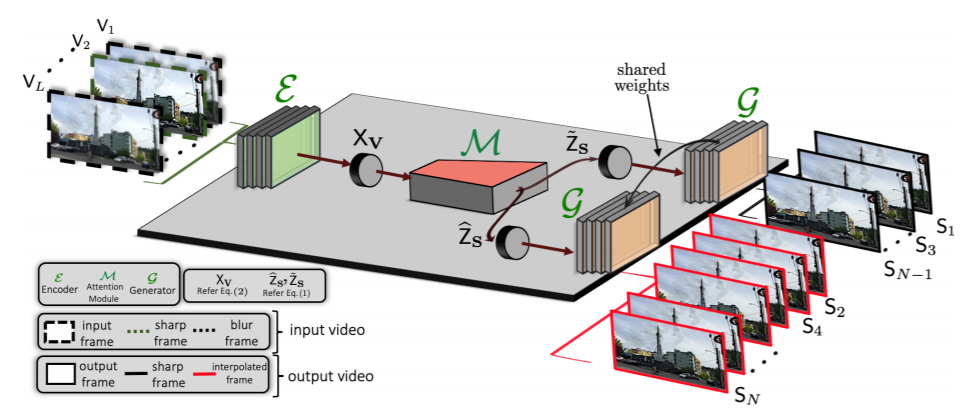

Existing works address the problem of generating high frame-rate sharp videos by learning the frame deblurring and frame interpolation modules separately. Most of these approaches make the strong prior assumption that all input frames are blurry, whereas in real-world settings the quality of frames varies. Moreover, such approaches are trained to perform only one of the two tasks, deblurring or interpolation, in isolation, while many practical situations call for both. In contrast, we address the more realistic problem of high frame-rate sharp video synthesis with no prior assumption that the input is always blurry. We introduce a novel architecture, Adaptive Latent Attention Network (ALANET), which synthesizes sharp high frame-rate videos with no prior knowledge of whether the input frames are blurry, thereby performing both deblurring and interpolation. We hypothesize that information from the latent representations of consecutive frames can be used to generate optimized representations for both frame deblurring and frame interpolation. Specifically, we employ a combination of self-attention and cross-attention modules between consecutive frames in the latent space to generate an optimized representation for each frame. The optimized representations learned with these attention modules help the model generate and interpolate sharp frames. Extensive experiments on standard datasets demonstrate that our method performs favorably against various state-of-the-art approaches, even though we tackle a much more difficult problem.
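To make the attention idea in the abstract concrete, here is a minimal NumPy sketch of self-attention within one frame's latent features and cross-attention against the next frame's latent features. It is an illustration only, not the ALANET implementation: the function names (`attention`, `refine_latent`), the tensor sizes, the absence of learned projections, and the residual averaging used to fuse the two outputs are all assumptions made for this example.

```python
import numpy as np

def softmax(x, axis=-1):
    # Subtract the row maximum so the exponentials stay numerically stable.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def attention(queries, keys, values):
    """Scaled dot-product attention; returns (output, attention weights)."""
    d = queries.shape[-1]
    weights = softmax(queries @ keys.T / np.sqrt(d))
    return weights @ values, weights

def refine_latent(z_cur, z_next):
    """Hypothetical fusion: residual average of self- and cross-attended features."""
    # Self-attention: the current frame's latent positions attend to each other.
    self_out, _ = attention(z_cur, z_cur, z_cur)
    # Cross-attention: queries come from the current frame,
    # keys and values come from the neighboring frame.
    cross_out, _ = attention(z_cur, z_next, z_next)
    return z_cur + 0.5 * (self_out + cross_out)

# Made-up sizes: 16 latent positions with 32 channels per frame.
rng = np.random.default_rng(0)
z_t = rng.standard_normal((16, 32))
z_t1 = rng.standard_normal((16, 32))
refined = refine_latent(z_t, z_t1)
```

In this sketch, `refined` has the same shape as `z_t`, so it could stand in for the frame's latent representation in a downstream decoder. A trained model would normally apply learned query, key, and value projections before the dot products.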

Downloads

BibTeX

@inproceedings{gupta2020alanet,
  title={ALANET: Adaptive Latent Attention Network for Joint Video Deblurring and Interpolation},
  author={Gupta, Akash and Aich, Abhishek and Roy-Chowdhury, Amit K.},
  booktitle={Proceedings of the 28th ACM International Conference on Multimedia},
  pages={256--264},
  year={2020}
}

Acknowledgements

This work was partially supported by NSF grants 33397 and 33425, ONR grant N00014-18-1-2252, and a gift from CISCO. We thank Padmaja Jonnalagedda, JVL Venkatesh and JV Megha for valuable discussions and feedback on the paper.

Code

Slides
